Qihua Dong's

I am currently a PhD student at Northeastern University, Boston. I graduated from City University of Hong Kong with a major in computer science and a minor in math.

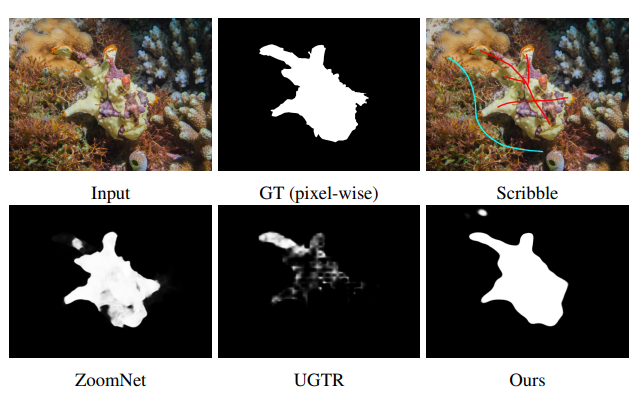

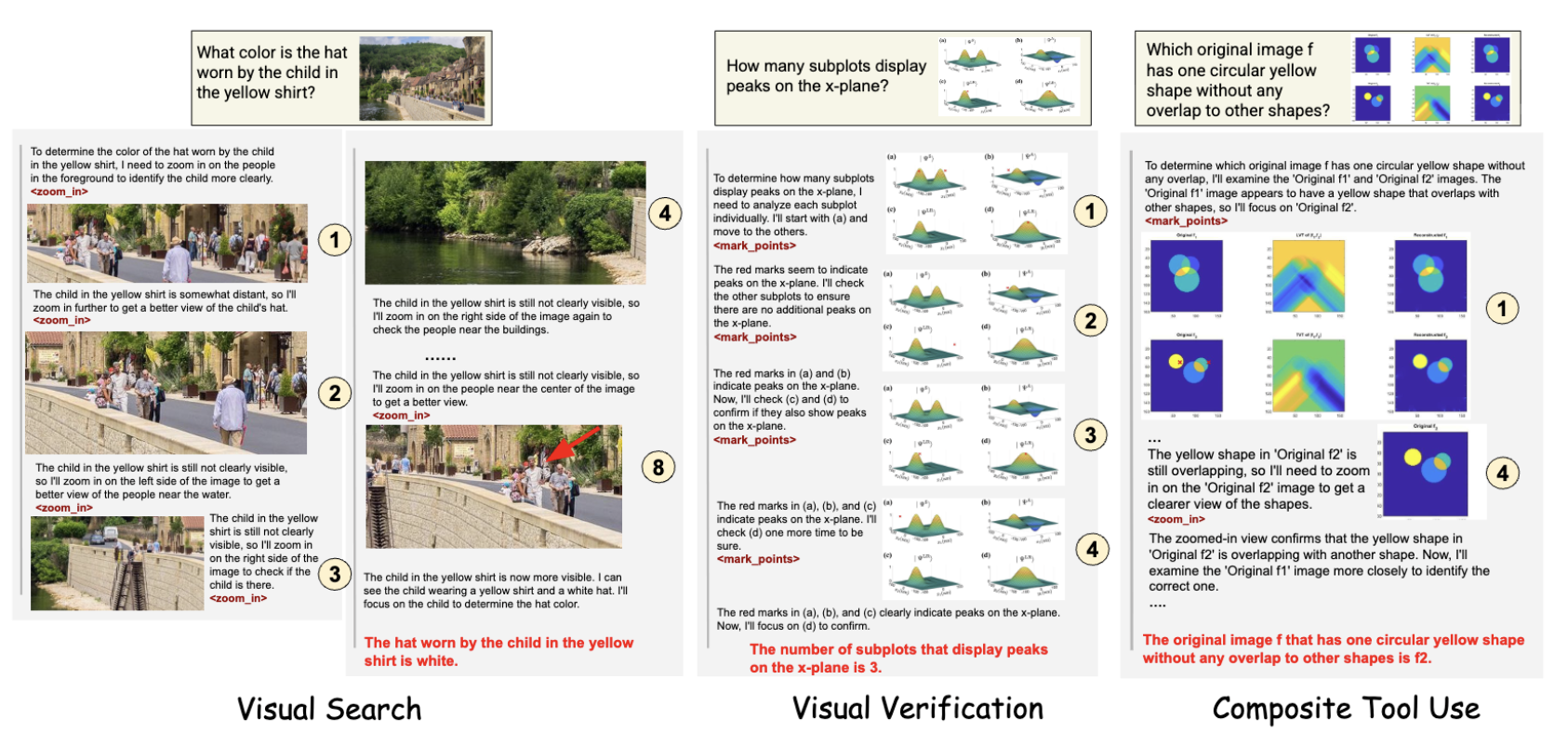

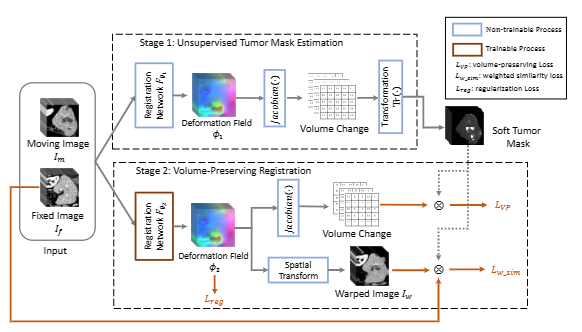

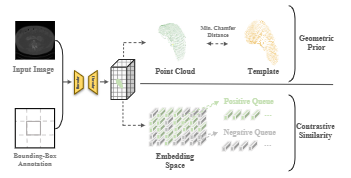

My research focuses on reasoning and visual understanding in (M)LLMs, including reinforcement learning and tool-use agents. My prior experience spans multimodal LLMs, image segmentation, and medical image analysis.

P.S. You can reach me by email, Twitter, or GitHub. Collaborations are welcome!

News

| Feb 2026 | Our new preprint Fine-T2I is released: Fine-T2I: An Open, Large-Scale, and Diverse Dataset for High-Quality T2I Fine-Tuning. The dataset is available on HuggingFace and was trending #1 in datasets! |

|---|---|

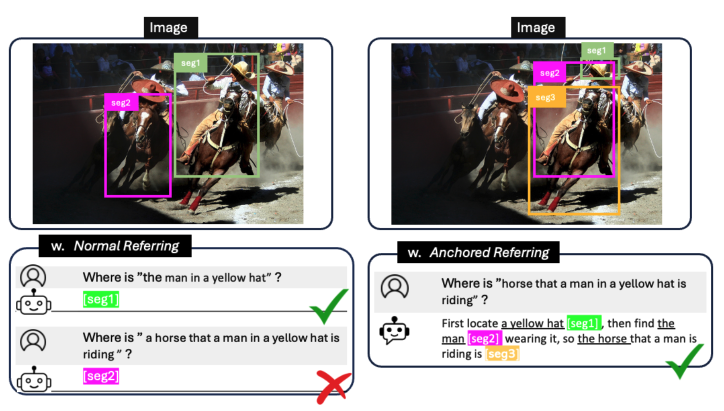

| Jan 2026 | One paper accepted at ICLR 2026: Ref-Adv: Exploring MLLM Visual Reasoning in Referring Expression Tasks! |

| Oct 2025 | Honored to receive the Outstanding Reviewer Award at ICCV 2025! |

| May 2025 | Excited to join Amazon AGI Foundation Team as Applied Scientist Intern, working on visual reasoning with Hao Yang! |

| May 2024 | Thrilled to join Adobe Research as Research Intern, working on MLLM for referring segmentation with the kind and inspiring Luis A. Figueroa! |

Projects

Authors marked with * contributed equally to the work.


IEEE Transactions on Medical Imaging, 2023

IEEE Transactions on Medical Imaging, 2023